Unleashing GPT-4 Omni: Harnessing Data Insights with AI

GPT-4 Omni offers more than advanced language processing: it can change how businesses derive insights from their data. Once it is integrated into your systems, your finance team or C-level managers can query your organization's data in natural language, without writing SQL or waiting for an analyst.

The Power of GPT-4 Omni in Data Analysis

GPT-4 Omni (GPT-4o), developed by OpenAI, represents a significant step forward in AI technology. Compared with its predecessors, it is designed to handle a broader range of tasks, including complex data analysis and insight generation. That makes it a strong candidate for businesses that want to democratize data access and let non-technical users explore and understand data in real time.

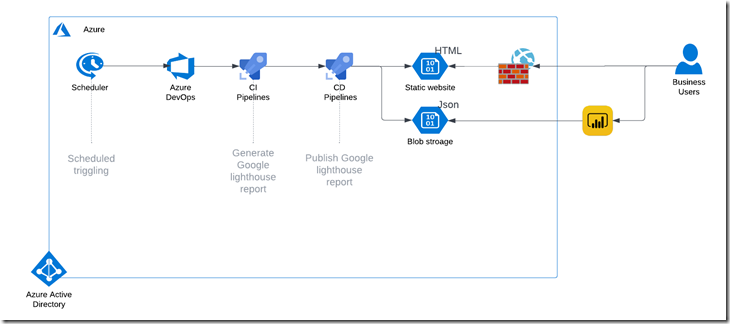

Addressing Ad-Hoc Requests with Azure OpenAI Chatbot

Consider a typical scenario: your finance team needs immediate insight into recent sales trends, or a C-level manager wants a quick analysis of profitability drivers. An Azure OpenAI chatbot powered by GPT-4 Omni can handle these ad-hoc requests quickly. The chatbot interacts with users in natural language, interprets nuanced queries, and answers based on the data at hand.

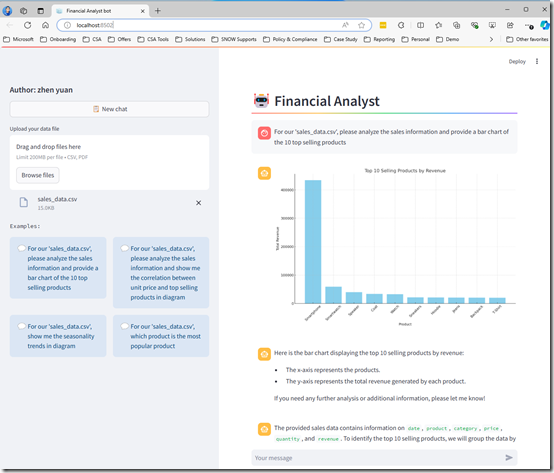

Demo Application: Bringing Data Insights to Life

Recently, I developed a demo application to showcase the capabilities of GPT-4 Omni in the realm of data analytics. In this demo, I uploaded a CSV file containing a sample sales dataset, complete with sales dates, products, categories, and revenue figures. The goal was to demonstrate how GPT-4 Omni can transform raw data into actionable insights through simple conversational queries.

How It Works: From Data Upload to Insights

You can watch the video here

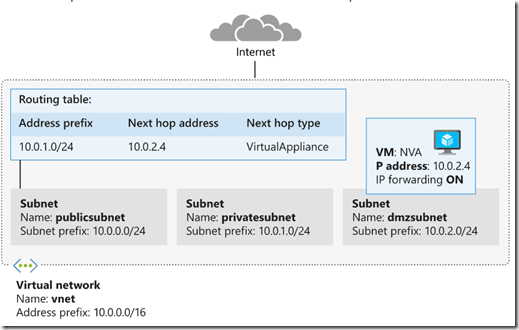

- Data Upload and Integration: The CSV file was uploaded into the demo application, which then processed and integrated the data into a format accessible to GPT-4 Omni.

- Conversational Queries: Users interacted with the chatbot by asking questions such as:

  - "What are the top-selling products in the sales data?"

  - "Is there any correlation between unit price and quantity sold?"

  - "Are there any seasonal trends in the sales data?"

- Natural Language Processing: GPT-4 Omni processed these queries, utilizing its advanced natural language understanding capabilities to interpret the intent behind each question.

- Insight Generation: Based on the data provided, GPT-4 Omni generated insightful responses, presenting trends, correlations, and summaries in a clear and understandable manner.
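To make the three example questions concrete, here is a minimal sketch of the calculations they imply, written in plain Python with only the standard library. It is not the code the demo or the model actually runs. The sample rows, the column names (`sale_date`, `product`, `unit_price`, `quantity`), and the helper functions are all hypothetical and chosen for illustration.

```python
import csv
import io
from collections import defaultdict
from math import sqrt

# Hypothetical sample data; the real demo dataset and its column names may differ.
SAMPLE_CSV = """sale_date,product,category,unit_price,quantity
2024-01-15,Laptop,Electronics,1000,2
2024-01-20,Mouse,Accessories,20,10
2024-02-03,Laptop,Electronics,1000,1
2024-02-18,Desk,Furniture,300,3
2024-03-07,Mouse,Accessories,20,15
"""


def load_rows(text):
    """Parse CSV text into dicts with numeric fields and a derived revenue."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):
        price = float(rec["unit_price"])
        qty = int(rec["quantity"])
        rows.append({
            "month": rec["sale_date"][:7],  # "YYYY-MM" prefix of the date
            "product": rec["product"],
            "unit_price": price,
            "quantity": qty,
            "revenue": price * qty,
        })
    return rows


def top_products(rows, n=3):
    """Top-selling products, ranked by total revenue."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["product"]] += r["revenue"]
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def revenue_by_month(rows):
    """Total revenue per month, a first look at seasonal patterns."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["month"]] += r["revenue"]
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    rows = load_rows(SAMPLE_CSV)
    print(top_products(rows))
    print(pearson([r["unit_price"] for r in rows], [r["quantity"] for r in rows]))
    print(revenue_by_month(rows))
```

In the chatbot, the user never sees code like this. The value of the conversational interface is that the question alone is enough to trigger the equivalent analysis.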

The Role of Assistants API

The Assistants API is what connects the model to your data and tools. It lets developers create AI assistants within their applications, and these assistants can respond to user queries using a variety of models, tools, and files. Currently, the Assistants API supports three tools: Code Interpreter, File Search, and Function Calling. For more detail, refer to the Microsoft Learn quickstart "Getting started with Azure OpenAI Assistants (Preview)".
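As a rough illustration of that flow, the sketch below uploads a CSV file, creates an assistant with the Code Interpreter tool, and asks one question using the `openai` Python package's `AzureOpenAI` client. It is a sketch under stated assumptions, not the demo's actual code: the environment variable names, the assistant name and instructions, and the API version string are assumptions, and you should check them against the version your Azure resource supports.

```python
import os


def build_assistant_kwargs(deployment_name, file_ids):
    """Build keyword arguments for client.beta.assistants.create.

    The name and instructions are hypothetical placeholders.
    """
    return {
        "name": "Sales Data Analyst",
        "instructions": (
            "You are a data analyst. Use the attached CSV file to answer "
            "questions, and explain your findings in plain language."
        ),
        "model": deployment_name,  # on Azure, this is the deployment name
        "tools": [{"type": "code_interpreter"}],
        "tool_resources": {"code_interpreter": {"file_ids": list(file_ids)}},
    }


def ask_about_csv(csv_path, question):
    """Upload a CSV, run one question through an assistant, return the reply."""
    # Imported lazily so the helper above can be used without the package.
    from openai import AzureOpenAI

    client = AzureOpenAI(
        azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],  # assumed variable name
        api_key=os.environ["AZURE_OPENAI_API_KEY"],  # assumed variable name
        api_version="2024-05-01-preview",  # assumption; verify for your resource
    )

    # Upload the file so that Code Interpreter can read it.
    with open(csv_path, "rb") as fh:
        uploaded = client.files.create(file=fh, purpose="assistants")

    assistant = client.beta.assistants.create(
        **build_assistant_kwargs(
            os.environ["AZURE_OPENAI_DEPLOYMENT"],  # assumed variable name
            [uploaded.id],
        )
    )

    # A thread holds the conversation; a run executes the assistant on it.
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question
    )
    run = client.beta.threads.runs.create_and_poll(
        thread_id=thread.id, assistant_id=assistant.id
    )
    if run.status != "completed":
        raise RuntimeError(f"Run ended with status: {run.status}")

    # Messages are listed newest first by default, so the first assistant
    # message found is the latest reply.
    for message in client.beta.threads.messages.list(thread_id=thread.id).data:
        if message.role == "assistant":
            return "\n".join(
                part.text.value for part in message.content if part.type == "text"
            )
    return ""
```

A call such as `ask_about_csv("sales.csv", "What are the top-selling products?")` would then return the assistant's answer as text, where `sales.csv` stands in for your own file.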

Conclusion

As AI continues to advance, tools like GPT-4 Omni and the Assistants API are changing how businesses approach data analytics. Getting AI-driven insights from your own data through an intuitive, conversational interface is a significant competitive advantage. Whether the goal is optimizing operations, identifying new market opportunities, or improving financial forecasting, GPT-4 Omni and the Assistants API support a more data-driven and agile business.

Integrating GPT-4 Omni and the Assistants API into your data strategy not only improves operational efficiency but also fosters a culture of data-driven decision-making across your organization. Embrace AI-powered data insights and unlock new possibilities for growth and innovation.