What is Microsoft Copilot Studio?

Microsoft Copilot Studio is a low-code environment for building, managing, and deploying conversational AI agents. It is part of Microsoft's broader Copilot ecosystem. In Copilot Studio you can define topics that guide conversations, use orchestration to keep dialogues logical and in context, and create custom actions that integrate with backend services and APIs. Because it is designed for both developers and non-technical users, Copilot Studio shortens the time it takes to build personalized AI-driven experiences while keeping flexibility and control in your hands.

In this blog post, I'll look at how to use topics, orchestration, and actions in Microsoft Copilot Studio to build dynamic conversational agents. First, I'll explore how clearly defined topics help your agent understand user intent. Next, I'll explain how orchestration keeps conversations flowing smoothly by managing context and the transitions between interactions. Finally, I'll show how custom actions extend the agent's capabilities by integrating with external services, which enables richer, more personalized experiences.

What is a topic?

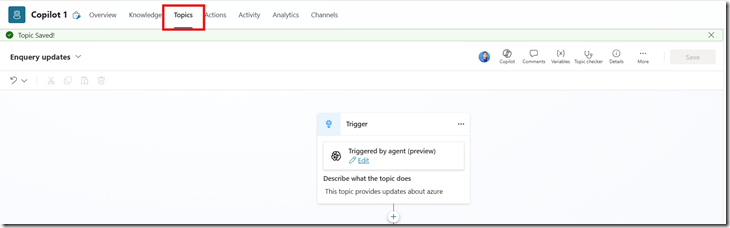

A topic in Microsoft Copilot Studio represents a specific conversational scenario that your AI agent can recognize and respond to. Think of topics as conversational building blocks, each designed to handle a particular user intent or question. Examples include booking appointments, answering product FAQs, and troubleshooting common issues. Each topic starts from a trigger, such as a set of trigger phrases describing what a user might say. Clearly defined, targeted topics help your agent detect what the user wants and deliver focused, accurate responses, so interactions feel more natural.
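The following Python sketch makes the idea of matching an utterance to a topic more concrete. It is deliberately simplified and is not how Copilot Studio recognizes intent, because the platform uses its own language understanding. The `Topic` class and the `match_topic` function are hypothetical. In this sketch, word overlap with trigger phrases stands in for real intent recognition.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Topic:
    """Hypothetical, simplified stand-in for a Copilot Studio topic."""
    name: str
    trigger_phrases: list[str]
    response: str


def _tokens(text: str) -> set[str]:
    # Lowercase, split on whitespace, and strip simple trailing punctuation.
    return {w.strip("?.!,") for w in text.lower().split() if w.strip("?.!,")}


def match_topic(utterance: str, topics: list[Topic], threshold: float = 0.5) -> Topic | None:
    """Return the topic whose trigger phrase best overlaps the utterance.

    The score is the fraction of a trigger phrase's words found in the utterance.
    Returns None below the threshold, where a fallback topic would take over.
    """
    words = _tokens(utterance)
    best, best_score = None, 0.0
    for topic in topics:
        for phrase in topic.trigger_phrases:
            phrase_words = _tokens(phrase)
            if not phrase_words:
                continue
            score = len(words & phrase_words) / len(phrase_words)
            if score > best_score:
                best, best_score = topic, score
    return best if best_score >= threshold else None
```

With a "Book appointment" topic triggered by "book an appointment", the utterance "Can I book an appointment for Monday?" matches that topic. An unrelated question such as "What's the weather?" returns `None`.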

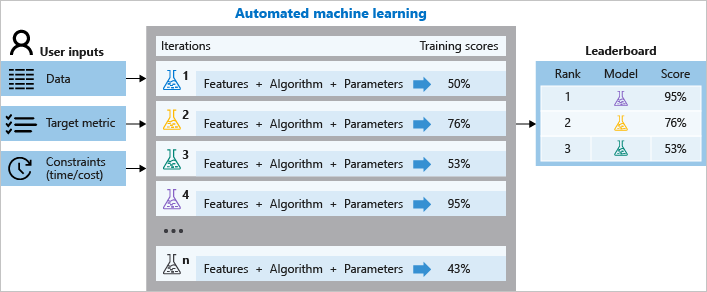

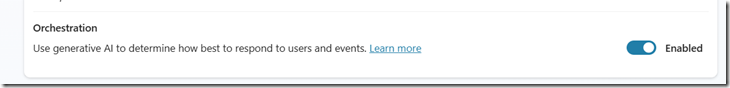

What is orchestration?

Orchestration in Microsoft Copilot Studio manages the conversational flow across topics and actions so that interactions stay logical and context-aware. Think of orchestration as the conductor of the conversation. It decides when to transition between topics, which actions to invoke, and how to carry context through the dialogue. Good orchestration lets your AI agent handle complex user journeys, adapt to user input, and deliver coherent, human-like conversations.

As the example above illustrates, orchestration decides which topic to navigate to next based on the current context of the conversation.
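The following Python sketch illustrates the two jobs described here: routing each turn to a topic, and carrying context between turns. It is a toy stand-in, not Copilot Studio's orchestrator. In Copilot Studio the platform makes this routing decision; with generative orchestration, a language model chooses based on the descriptions of your topics and actions. The `Orchestrator` class, the keyword routing, and the topic handlers are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# A handler receives the user's message and the shared context dict, and returns a reply.
Handler = Callable[[str, dict], str]


@dataclass
class Orchestrator:
    """Hypothetical toy orchestrator: routes each turn and keeps shared context."""
    routes: dict[str, Handler]  # keyword -> topic handler
    fallback: Handler
    context: dict = field(default_factory=dict)

    def handle(self, message: str) -> str:
        text = message.lower()
        for keyword, handler in self.routes.items():
            if keyword in text:
                self.context["last_topic"] = keyword
                return handler(message, self.context)
        # No new topic matched: stay in the previous topic if there is one.
        last = self.context.get("last_topic")
        if last is not None:
            return self.routes[last](message, self.context)
        return self.fallback(message, self.context)


def azure_updates_topic(message: str, context: dict) -> str:
    # Remember the service the user mentioned so follow-up turns can reuse it.
    for service in ("storage", "functions", "kubernetes"):
        if service in message.lower():
            context["service"] = service
    return f"Fetching Azure updates for {context.get('service', 'all services')}."


def fallback_topic(message: str, context: dict) -> str:
    return "Sorry, I didn't catch that. Try asking about Azure updates."
```

Suppose the user asks "Show me Azure updates for storage" and then follows up with "Only the latest ones, please". The second turn stays in the same topic and still refers to storage, because the context dict kept that detail.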

What is an action?

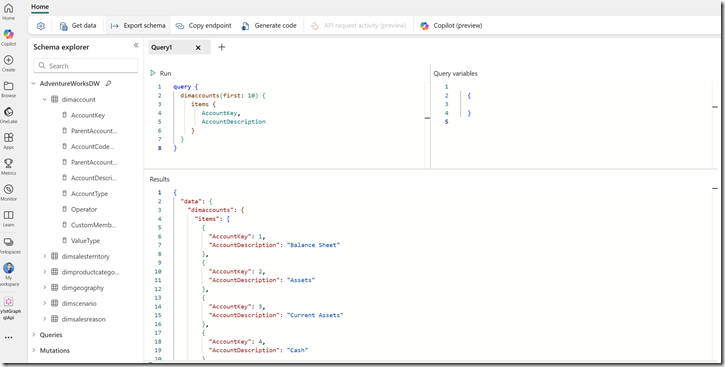

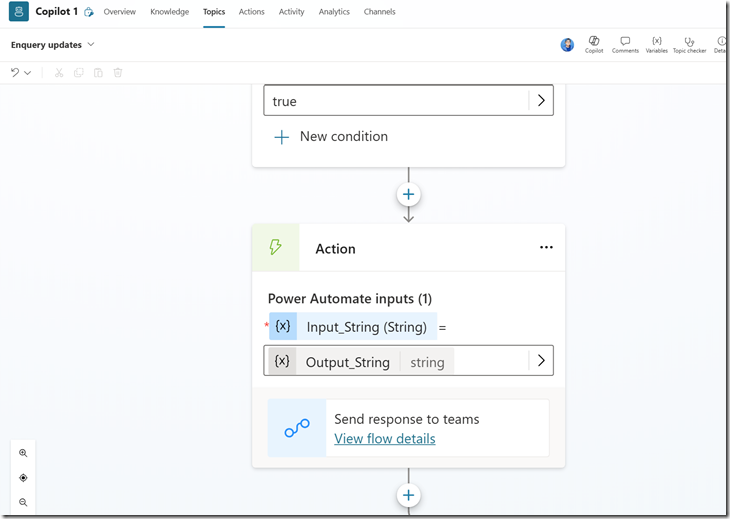

An action in Microsoft Copilot Studio lets your conversational agent interact with external systems, APIs, or backend processes to run tasks or retrieve dynamic information. Actions extend your agent beyond static responses. They enable real-world operations such as checking inventory, scheduling meetings, processing orders, or pulling up personalized user data. With actions, your agent can meet users' real-time needs directly within the conversation.
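The following Python sketch shows the kind of work an action does behind the scenes, tied to this post's demo of retrieving Azure updates. The post doesn't say where the demo gets its updates. This sketch assumes an RSS 2.0 feed whose URL you supply, and it parses the feed with the standard library. Inside Copilot Studio, you would more likely expose logic like this through a connector or a Power Automate flow than through raw Python. The names `Update`, `parse_updates`, and `fetch_updates` are hypothetical.

```python
from __future__ import annotations

import urllib.request
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Update:
    title: str
    link: str
    published: str


def parse_updates(rss_xml: str | bytes, limit: int = 5) -> list[Update]:
    """Extract the first `limit` <item> entries from an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    items = root.findall("./channel/item")[:limit]
    return [
        Update(
            title=(item.findtext("title") or "").strip(),
            link=(item.findtext("link") or "").strip(),
            published=(item.findtext("pubDate") or "").strip(),
        )
        for item in items
    ]


def fetch_updates(feed_url: str, limit: int = 5, timeout: float = 10.0) -> list[Update]:
    """Download a feed (feed_url is a placeholder you supply) and parse it."""
    with urllib.request.urlopen(feed_url, timeout=timeout) as resp:
        # Pass raw bytes so the XML parser honors the feed's declared encoding.
        return parse_updates(resp.read(), limit)
```

Keeping the parsing separate from the download lets you test the action's output without network access.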

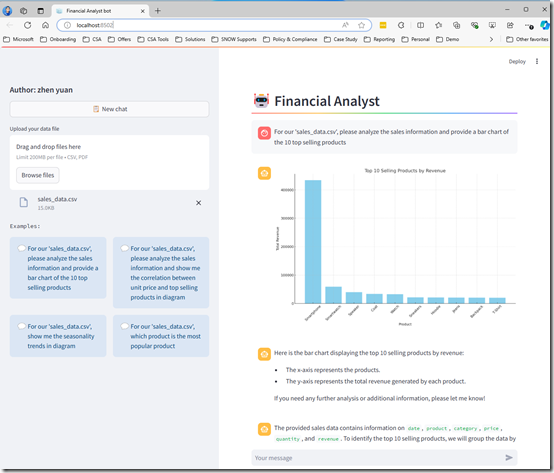

Now that we've covered topics, orchestration, and actions in Microsoft Copilot Studio, let's see how to apply them in practice to build better agent conversations.

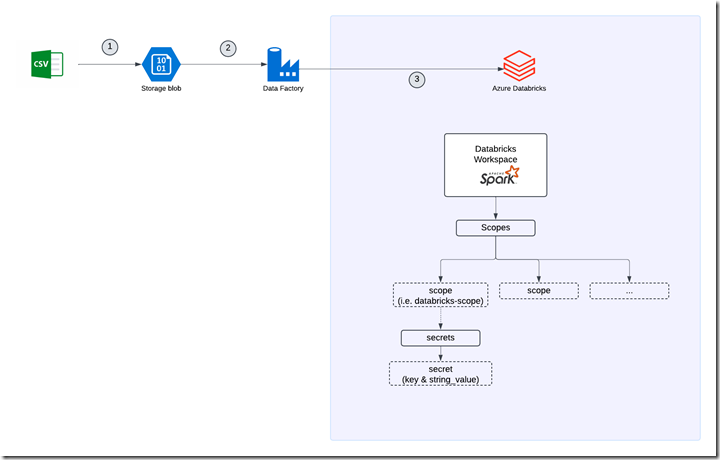

In this demo, I'll walk through a practical use case: building a Copilot agent that keeps my team informed about the latest Azure updates.

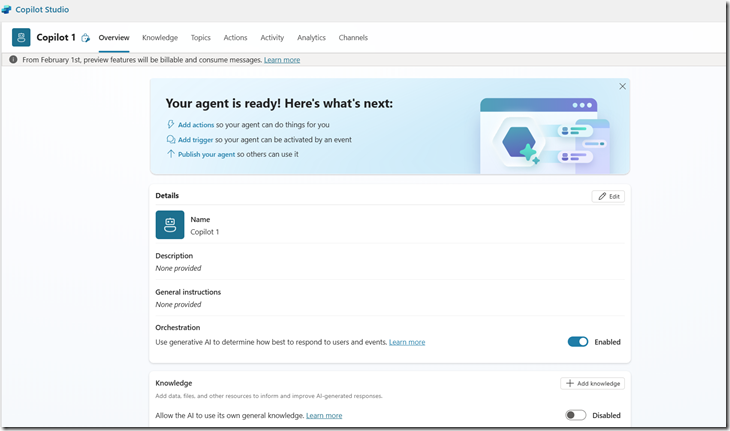

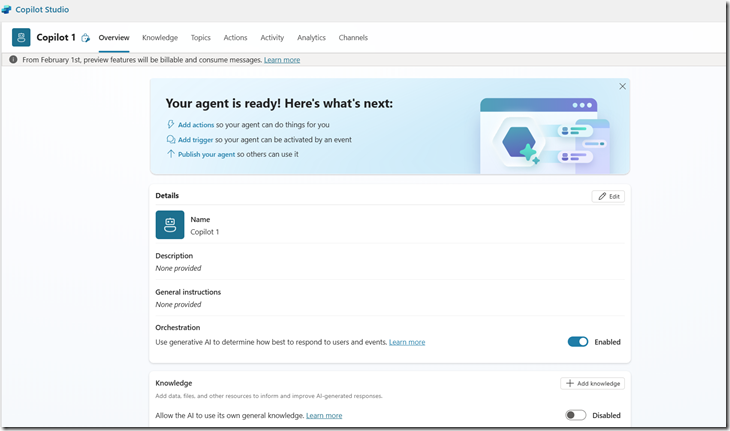

Step One: Create a copilot in Microsoft Copilot Studio

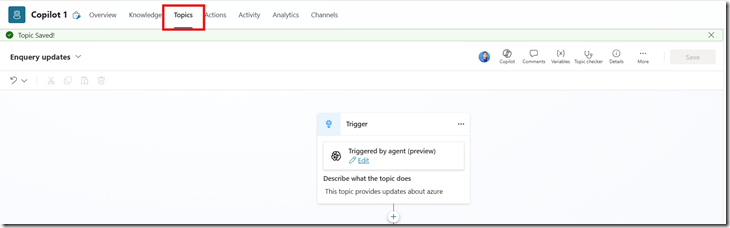

Step Two: Create a topic

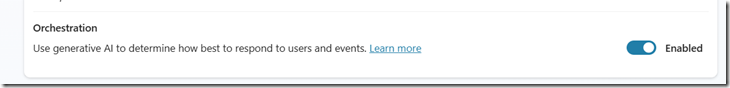

Step Three: Enable AI orchestration

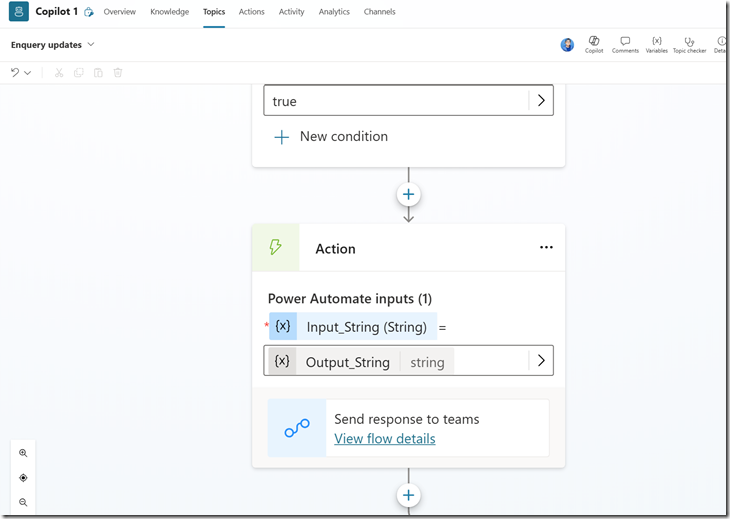

Step Four: Create an action to integrate with Teams
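The post doesn't show how the Teams action is wired up. In Copilot Studio it would typically be a Teams connector or a Power Automate flow. As one alternative, the following Python sketch posts an Adaptive Card to a Teams webhook URL. The URL is a placeholder, and the exact payload a webhook accepts depends on how it was created, so check it against your own setup. The names `build_teams_card` and `post_to_teams` are hypothetical.

```python
from __future__ import annotations

import json
import urllib.request


def build_teams_card(title: str, updates: list[tuple[str, str]]) -> dict:
    """Wrap an Adaptive Card listing (title, link) pairs in a message payload."""
    body = [{"type": "TextBlock", "text": title, "weight": "Bolder", "size": "Medium", "wrap": True}]
    for update_title, link in updates:
        # Adaptive Card TextBlocks render a subset of Markdown, including links.
        body.append({"type": "TextBlock", "text": f"- [{update_title}]({link})", "wrap": True})
    card = {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.4",
        "body": body,
    }
    return {
        "type": "message",
        "attachments": [
            {"contentType": "application/vnd.microsoft.card.adaptive", "content": card}
        ],
    }


def post_to_teams(webhook_url: str, payload: dict, timeout: float = 10.0) -> int:
    """POST the payload as JSON to a webhook URL you supply; returns the HTTP status."""
    data = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        webhook_url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Building the card separately from sending it lets you inspect or test the message before it reaches a real channel.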

Check out the full demo here:

https://youtu.be/b3axMOtt8yk?feature=shared

Once you’ve completed these steps, you’ll receive a notification in Teams.

Conclusion

Topics, orchestration, and actions together let you build context-aware, highly interactive conversational agents in Microsoft Copilot Studio. Topics define clear conversational paths, and orchestration manages the dialogue flow while carrying context between turns. Actions connect the agent to real-world systems. Combining all three improves the user experience and saves your team time. With these insights, you're ready to build smarter, more dynamic agents. Happy building!